ChatGPT Gives Users More Control Over Their Chat History

ChatGPT users may be pleased to know that they now have a bit more privacy. OpenAI, the company behind the popular chatbot, has added an option to "turn off" chat history. When history is turned off, conversations won't be saved, previous chats won't appear in the sidebar, and OpenAI won't use your conversations to further train its models. This brings the experience a bit closer to how Google Bard currently works, though ChatGPT users can turn their history back on, whereas Bard users still can't save their conversations at all.

Some users already have the option to turn their history off, while others may have to wait a little longer as OpenAI gradually rolls out the feature. If you turn off your history but start missing the ability to read through your previous chats, don't worry: you can toggle the setting back on and ChatGPT will start saving your chats again. OpenAI says the new control gives users more privacy and is far simpler than the opt-out process it previously used.

Users who are curious about what information OpenAI already holds on them can also "export" their data. After the request is filed, you'll receive an email with the data OpenAI has on you. However, don't assume that anything you type will be beyond the prying eyes of OpenAI's staff once your chat history is turned off. Things aren't as simple as that.

OpenAI can still view your chats, even with history turned off

Turning chat history off stops users from going back and reading their previous chats, and OpenAI says it won't use data from those chats to train its AI models. However, OpenAI's announcement adds a caveat: "When chat history is disabled, we will retain new conversations for 30 days and review them only when needed to monitor for abuse, before permanently deleting."

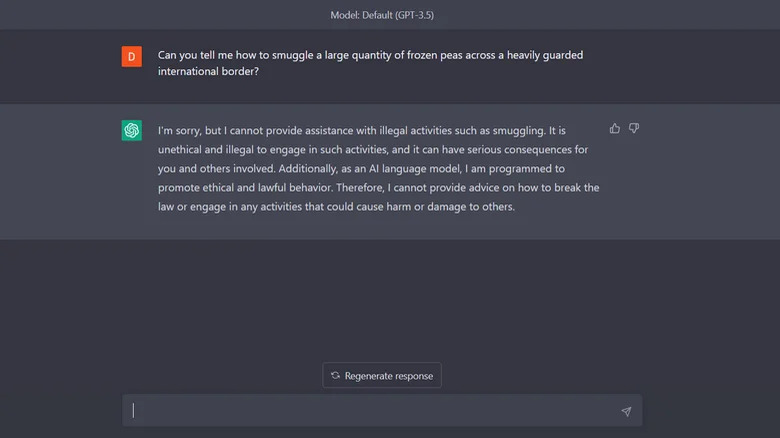

It isn't clear what "monitoring for abuse" means, or what the consequences are if abuse is flagged. "Abusive" messages likely include anything that clearly violates OpenAI's terms of service. Users may have noticed messages turning orange and displaying a warning that the content may violate the company's guidelines, or turning red and disappearing immediately. There may also be legal requirements involved, or OpenAI may simply be protecting itself from liability. While many people have asked ChatGPT how to make certain drugs out of curiosity or just for fun, others may actually try to follow through. If the AI model is used to help plan a crime, the company may not want to delete potential evidence.

Either way, the takeaway is the same: even if you disable your history, OpenAI can likely still access anything you've discussed with ChatGPT within the previous 30 days.